A wide range of humanities data can be analysed, including text (from literature, newspapers and social media), images (from art history and traditional or social media) and material culture (from representations of artefacts to ethnographic reports of their creation). An integral step in data analysis is the creation of structured data in the form of data tables, whose features characterise the different members of the set being investigated (such as individual artefacts or communities of potters). The features chosen often differ from those of interest in the original sources (Drucker, 2021; Posner, 2015). Parameterisation involves deciding which features can be measured (e.g. the number of words, or the length of a vessel part); these features result in quantitative data. Deciding what constitutes a unit, such as a meaningful word, is called tokenisation.
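As a minimal sketch of tokenisation and parameterisation, the Python snippet below turns two short texts into a small data table. The texts, the document names, the chosen vocabulary and the helper functions `tokenise` and `featurise` are all invented for illustration; splitting on runs of letters is only one of many possible tokenisation choices.

```python
import re
from collections import Counter

# Hypothetical mini-corpus: names and texts are invented for illustration.
documents = {
    "letter_a": "The potter shaped the clay, and the kiln was lit.",
    "letter_b": "Clay from the river was carried to the village.",
}


def tokenise(text):
    """Split text into lowercase word tokens (one simple choice among many)."""
    return re.findall(r"[a-z']+", text.lower())


def featurise(text, vocabulary):
    """Turn one text into a row of measurable features."""
    tokens = tokenise(text)
    counts = Counter(tokens)
    row = {"n_tokens": len(tokens)}
    for word in vocabulary:
        row[f"count_{word}"] = counts[word]
    return row


# The features to measure are a research decision, not a property of the text.
vocabulary = ["clay", "the"]

# One row per document, one column per feature.
table = {name: featurise(text, vocabulary) for name, text in documents.items()}

for name, row in table.items():
    print(name, row)
```

Changing the tokenisation rule or the vocabulary changes the resulting table, which is why these decisions are worth recording alongside the data.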

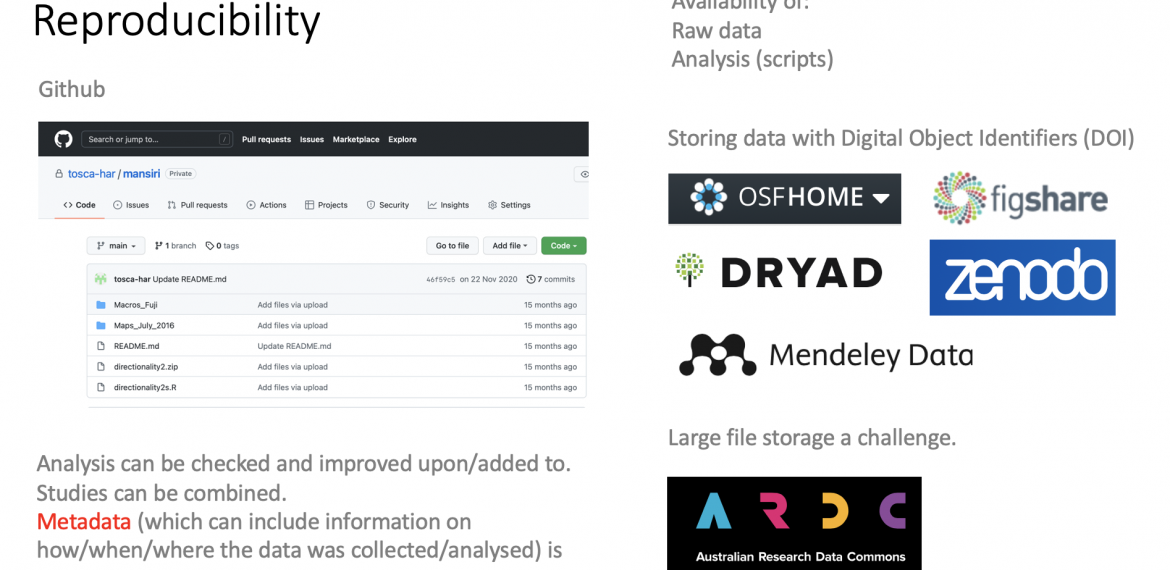

Analysis often involves estimating the similarity between objects in the dataset and determining how they are related to each other, or identifying which features are the most statistically significant. There are multiple advantages to analysing larger datasets in a scripting ‘language’ such as R or Python, whose libraries provide implementations of many statistical methods, as well as ways to efficiently download multiple records from archives (Hvitfeldt and Silge, 2021; McDaniel, 2014; Van del Rul, 2019). Making the raw data and the analysis scripts available with publication, through providers such as GitHub, is important for reproducibility. This allows the analysis to be properly understood, verified and potentially extended (using improved methods or additional data) by others. The use of metadata to describe the data (where, how and when it was collected) and its analysis adds value to the data and promotes its re-use.
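One common way to estimate the similarity between objects described by numeric features is cosine similarity. The sketch below is written in plain Python under stated assumptions: the vessel names and measurements are invented, and cosine similarity is only one of several possible measures, not a method prescribed by the sources cited here.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # convention chosen here for all-zero vectors
    return dot / (norm_a * norm_b)


# Hypothetical measurements: [height_cm, rim_diameter_cm, wall_thickness_cm]
vessels = {
    "vessel_1": [12.0, 4.5, 0.8],
    "vessel_2": [24.0, 9.0, 1.6],   # same proportions as vessel_1, twice the size
    "vessel_3": [5.0, 11.0, 0.3],   # a much flatter, wider form
}

names = sorted(vessels)
for i, first in enumerate(names):
    for second in names[i + 1:]:
        score = cosine_similarity(vessels[first], vessels[second])
        print(f"{first} vs {second}: {score:.3f}")
```

Cosine similarity compares proportions and ignores overall size, so `vessel_1` and `vessel_2` score as near-identical. If absolute size matters to the research question, a distance measure such as Euclidean distance may be more appropriate.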

It is important to critically interpret statistical findings from the data. A correlation between features does not necessarily mean that one causes the other. Datasets with many variables are especially susceptible to spurious correlations (Cook and Ranstam, 2017). All data collections and models have biases, and these should be assessed when interpreting analysis results. Many researchers criticise ‘big data’ studies as “fishing expeditions” and argue that they yield only descriptive results, or that nuances can be lost when structuring the data into restricted categories (see Roberts, 2016; Posner, 2015). Keeping clearly defined questions and aims in mind when analysing big data is often essential to avoid “getting lost in the data”.
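The susceptibility of many-variable datasets to spurious correlations can be illustrated with a small simulation. In this sketch every feature is pure random noise, so any correlation found between two features is spurious by construction. The sizes (20 objects, 50 features) and the cut-off of 0.444 (approximately the conventional 5% significance threshold for 20 observations) are assumptions chosen for illustration.

```python
import itertools
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


random.seed(0)  # fixed seed so the run is repeatable

n_objects = 20    # e.g. 20 artefacts
n_features = 50   # 50 unrelated random "measurements" per artefact

# Each feature is independent noise: no real relationships exist.
features = [
    [random.gauss(0, 1) for _ in range(n_objects)]
    for _ in range(n_features)
]

# Correlate every pair of features.
correlations = [
    pearson(features[i], features[j])
    for i, j in itertools.combinations(range(n_features), 2)
]

threshold = 0.444  # assumed cut-off, roughly p < 0.05 for 20 observations
strong = [r for r in correlations if abs(r) > threshold]

print(f"{len(strong)} of {len(correlations)} pairs exceed |r| = {threshold}")
```

Because 50 features give 1225 pairs, some pairs are expected to pass the cut-off by chance alone. This is one reason to define questions in advance rather than test every possible pairing.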

There are important ethical considerations when working with and releasing humanities data, and these may prevent the publication of the raw data. These considerations generally involve preventing physical (and possibly emotional) harm as a result of the data collection or publication and respecting the wishes of the communities the data concerns. Often it is important that data concerning individuals is de-identified, and that communities give informed consent for how the data will be used. With publicly released data, this can be a challenge, as the data may be analysed by others without donor consultation, and information available from the world wide web, including social media, can sometimes aid in re-identification of individuals.
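As a minimal sketch of one de-identification step, the snippet below removes a direct identifier and replaces it with a keyed pseudonym. The record, the field names, the key and the helper functions are hypothetical. Pseudonymisation of this kind does not by itself prevent re-identification: remaining fields, such as a village name, may still identify someone when combined with other sources.

```python
import hashlib
import hmac

# Hypothetical secret key: in practice it would be kept private and never published.
SECRET_KEY = b"replace-with-a-private-key"

# Fields assumed, for this example, to identify a person directly.
DIRECT_IDENTIFIERS = {"name"}


def pseudonymise(identifier, key=SECRET_KEY):
    """Map an identifier to a stable pseudonym using a keyed hash (HMAC-SHA256)."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:12]  # shortened for readability


def deidentify(record):
    """Drop direct identifiers and add a pseudonymous participant ID."""
    cleaned = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    cleaned["participant_id"] = pseudonymise(record["name"])
    return cleaned


# Invented example records.
records = [
    {"name": "Participant One", "village": "Example Village", "response": "yes"},
    {"name": "Participant One", "village": "Example Village", "response": "no"},
]

released = [deidentify(r) for r in records]
for row in released:
    print(row)
```

The same person receives the same pseudonym in every record, so their responses can still be linked for analysis without the name appearing in the released table.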

When carried out with planning and with consideration of biases and caveats, data analysis can illuminate patterns in the histories of music, literature, material culture and other aspects of the humanities. The growing use of ‘reproducible’ data (when ethical) should increase the ability to ‘reuse’ and merge data and analyses, and to uncover further patterns and discoveries.

References and Links

Cook and Ranstam (2017) Spurious Findings. Brit. J. Surg., 104: 97

Drucker (2021) “Data Modeling and Use.” In The Digital Humanities Coursebook. Routledge.

Hvitfeldt and Silge (2021) Supervised Machine Learning for Text Analysis in R. CRC Press.

McDaniel (2014) Data Mining and the Internet Archive https://programminghistorian.org/en/lessons/data-mining-the-internet-archive

Posner (2015) https://miriamposner.com/blog/humanities-data-a-necessary-contradiction/

Roberts (2016) https://www.datasciencecentral.com/avoid-the-fishing-expedition-approach-to-analytics-projects/

Van del Rul (2019) https://towardsdatascience.com/a-guide-to-mining-and-analysing-tweets-with-r-2f56818fdd16

Languages

Data repositories to (help) make your data reproducible